Marcus Tomalin reflects on the Artificial Intelligence and Multimodality: From Semiotics to Intelligent Systems workshop which was a collaboration between the UCL Centre for Multimodal Research/Visual and Multimodal Research Forum and the Giving Voice to Digital Democracies project based at the University of Cambridge. The workshop brought together the fields of Artificial Intelligence (AI) and Multimodality, with the aim of identifying common ground that connects these two research domains.

The Giving Voice to Digital Democracies (GVDD) project, which is based at CRASSH’s Centre for the Humanities and Social Change and funded by the Humanities and Social Change International Foundation, hosted its first online workshop of 2021 on 14 June . This was a joint event that had been organised with the Centre for Multimodal Research at University College London, and it was dedicated to the memory of the influential Multimodality theorist Gunther Kress (1940 – 2019). The GVDD project investigates the social impact of Artificially Intelligent Communications Technology (AICT), while the Centre for Multimodal Research, which was established in 2006, explores multimodality from theoretical and practical perspectives, so the collaboration was an effective one. The organisers were Marcus Tomalin, who is the project manager of the GVDD project, and Sophia Diamantopoulou, who is a member of the Centre for Multimodal Research and the facilitator of the Visual and Multimodal Research Forum.

The workshop brought together researchers with interests in Artificial Intelligence (AI) and Multimodality (or both), with the aim of identifying common ground that connects these two research domains. Such an undertaking is timely since we live in a world that is increasingly multimodal. The internet, social media, mobile phones and other digital technologies have given prominence to forms of discourse in which texts, images and sounds combine to convey complex messages. Animated emojis, internet memes, the automatic captioning of live shows and e-literature are all illustrative examples of this trend. Since its emergence in the 1980s, the academic discipline of Multimodality has offered a set of robust analytical frameworks for examining multimodal phenomena. A careful consideration of such things enables the form and function of multimodal communication in human social interactions to be analysed in greater detail.

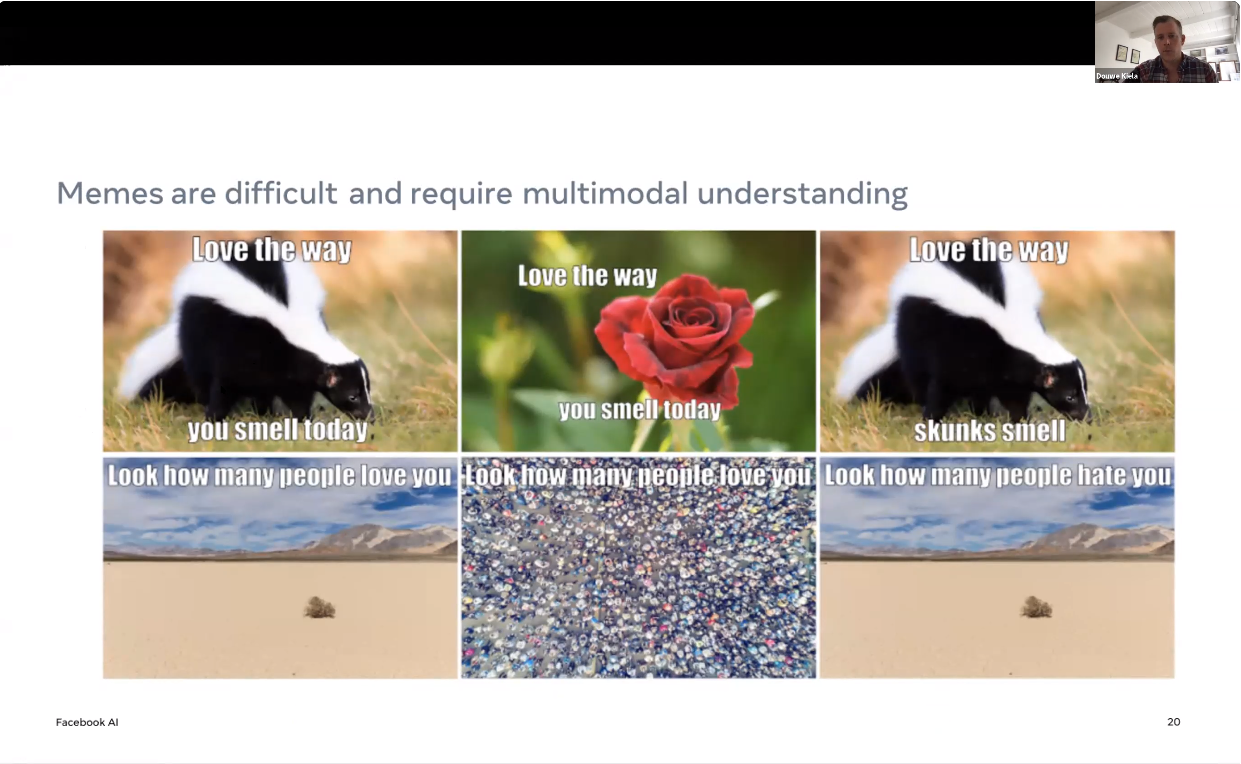

In a parallel development, over the last decade a new generation of AI systems has attained unprecedented levels of sophistication when performing generative and analytical tasks which, traditionally, only humans had accomplished convincingly. These advances have been triggered by the resurgence of neural-based machine-learning techniques which coincided with the greater availability of more effective hardware, such as Graphical Processing Units (GPUs), that facilitated parallel computation. The task of building state-of-the-art systems has benefited greatly from the release of free open-source software packages such as PyTorch (2016 – present) and Tensorflow (2017 – present) – and these advances have made it possible to create intelligent and autonomous systems that are increasingly multimodal. For instance, systems have been developed that can identify racist or sexist internet memes by processing texts as well as images (eg The Facebook Hateful Meme Challenge), while other researchers have created multimodal machine translation systems that perform image-guided translation of written or spoken texts. These multimodal tendencies are becoming increasingly prevalent in many modern AI applications.

However, despite their many obvious overlapping areas of interest, these two research domains remain largely disjunct. The computer scientists and information engineers who develop multimodal machine learning techniques tend to know very little about dominant theories of Multimodality while, conversely, the semioticians, linguists, philosophers, sociologists and social scientists who elaborate theories of Multimodality tend to know comparatively little about the architecture and application of multimodal AI systems. This persistent disconnection is regrettable since more extensive interactions involving researchers from both groups is likely to be mutually beneficial, and the workshop provided a valuable opportunity for AI researchers and Multimodality theorists alike to come together to exchange ideas and approaches in order to address crucial questions.

The event was divided into two sub-sections and the first, which focused on ‘Learning and Knowledge’, featured Kay O’Halloran (University of Liverpool), Lucia Specia (Imperial College London), Victor Lim Fei (Nanyang Technological University) and Nadia Berthouze (University College London). The resulting discussions explored a wide range of issues including the difficulty of obtaining and labelling high-quality data sets for multimodal AI research, the challenges facing state-of-the-art multimodal machine translation systems, the need for multimodal data analytics, the gender-related consequences of designing a multimodal persona for an automated system, and the difficulties of modelling multimodal communication when different modes convey contrary meanings (eg the facial expression implies one thing but the words spoken suggest the opposite).

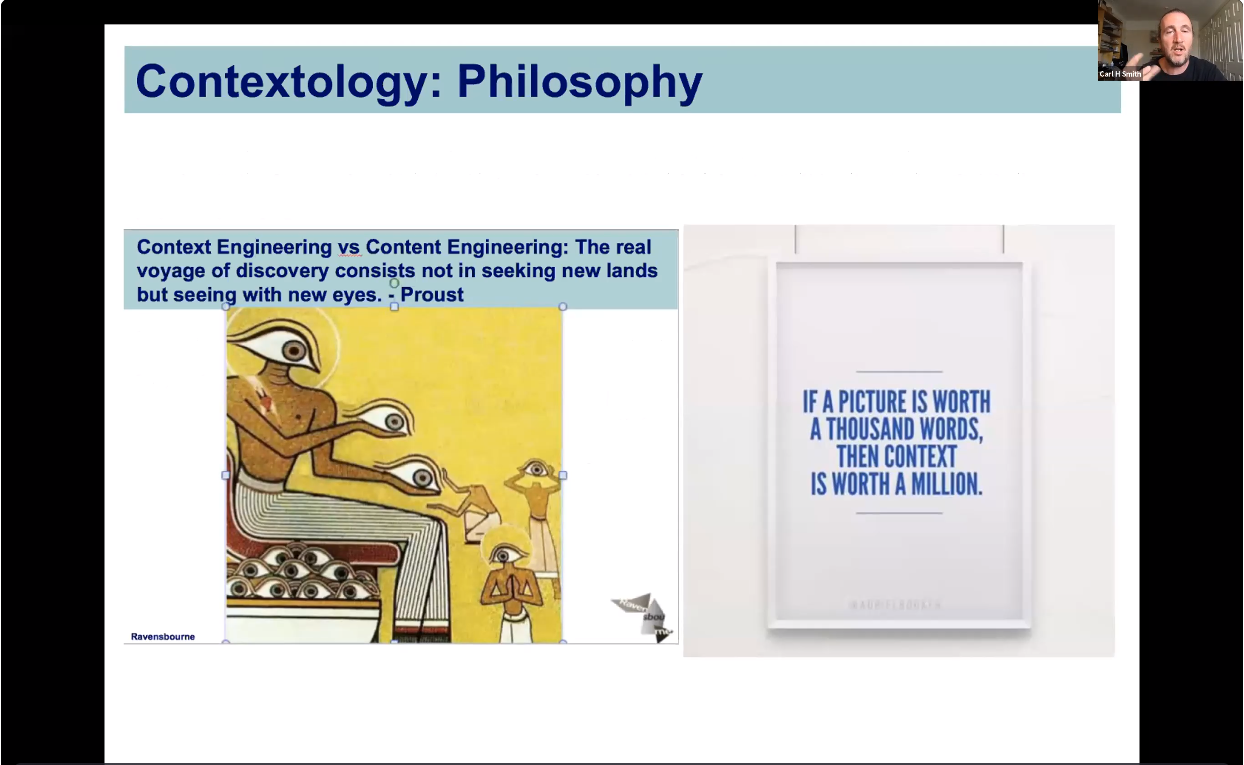

The second sub-section of the workshop focused on ‘Communication and Agency’ and the conversations involved Douwe Kiela (Facebook AI Research), Theo Van Leeuwen (University of Southern Denmark), Verena Rieser (Heriot-Watt University), Carl H Smith (Ravensbourne University London) and Rodney Jones (University of Reading). Some of the topics explored included the task of detecting hateful memes automatically online, the theoretical complexities that AI poses for social semiotic theories of multimodality, the multimodal implications of context engineering and mixed reality tools, and the consequences of the need for (multimodal) AI systems to make the world legible so that they can process it.

More than 260 people registered for the event. They were based in many different countries around the world, and questions from the audience were incorporated into the probing discussions that took place during each of the sessions. It is hoped that this workshop will simply be the first in a series of events that provide further opportunities for detailed discussion about the complex relationship between AI and Multimodality.